Evaluating Robustness to Context-Sensitive Feature Perturbations of Different Granularities

Abstract

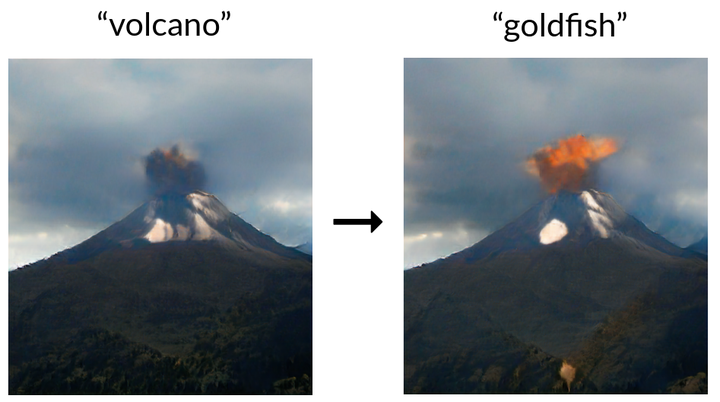

We cannot guarantee that training datasets are representative of the distribution of inputs that will be encountered during deployment. So we must have confidence that our models do not over-rely on this assumption. To this end, we introduce a new method that identifies context-sensitive feature perturbations (e.g.~shape, location, texture, colour) to the inputs of image classifiers. We produce these changes by performing small adjustments to the activation values of different layers of a trained generative neural network. Perturbing at layers earlier in the generator causes changes to coarser-grained features; perturbations further on cause finer-grained changes. Unsurprisingly, we find that state-of-the-art classifiers are not robust to any such changes. More surprisingly, when it comes to coarse-grained feature changes, we find that adversarial training against pixel-space perturbations is not just unhelpful: it is counterproductive.